1.0 FPGA AI Suite SoC Design Example Quick Start Tutorial¶

The FPGA AI Suite Design Example User Guides describe the design and implementation for accelerating AI inference using the FPGA AI Suite, Intel® Distribution of OpenVINO™ toolkit, and various development boards (depending on the design example). They share a common introduction between each document, which serves as an introduction to the material. Section 4.0 begins the ED specific material.

About the FPGA AI Suite Documentation Library¶

Documentation for the FPGA AI Suite is split across a few publications. Use the following table to find the publication that contains the FPGA AI Suite information that you are looking for:

Table 1. FPGA AI Suite Documentation Library¶

| Title and Description | Link |

|---|---|

| Release Notes Provides late-breaking information about the FPGA AI Suite including new features, important bug fixes, and known issues. |

Link |

| FPGA AI Suite Handbook Get up and running with the FPGA AI Suite by learning how to initialize your compiler environment and reviewing the various design examples and tutorials provided with the FPGA AI Suite Describes the use modes of the graph compiler ( dla_compiler). It also provides details about the compiler command options and the format of compilation inputs and outputs. |

Link |

| AN 1008: Using the FPGA AI Suite Docker Image Describes how to install and run the FPGA AI Suite Docker image with a Docker client running on a Microsoft* Windows* system. The containerized FPGA AI Suite enables easy and quick access to the various tools in FPGA AI Suite. Provides an overview of the FPGA AI Suite IP and the parameters you can set to customize it. This document also covers the FPGA AI Suite IP generation utility. |

Link |

2.0 FPGA AI Suite Design Examples¶

The following is a comprehensive list of the available FPGA AI Suite Design Example User Guides.

Table 2. FPGA AI Suite Design Examples Descriptions¶

| Design Example | Description |

|---|---|

| PCIe-attach design example | Demonstrates how OpenVINO toolkit and the FPGA AI Suite support the look-aside deep learning acceleration model. This design example targets the Terasic* DE10-Agilex Development Board (DE10-Agilex-B2E2). |

| OFS PCIe-attach design example | Demonstrates the OpenVINO toolkit and the FPGA AI Suite that target Open FPGA Stack (OFS)-based boards. This design example targets the following Open FPGA Stack (OFS)-based boards: * Agilex™ 7 FPGA I-Series Development Kit ES2 (DK-DEV-AGI027RBES) * Intel FPGA SmartNIC N6001-PL Platform (without Ethernet controller) |

| Hostless DDR-Free design examples | Demonstrates hostless DDR-free operation of the FPGA AI Suite IP. Graph filters, bias, and FPGA AI Suite IP configurations are stored in internal memory on the FPGA device. This design example targets the Agilex 7 FPGA I-Series Development Kit ES2 (DK-DEV-AGI027RBES). |

| Hostless JTAG design example | Demonstrates the step-by-step sequence of configuring FPGA AI Suite IP and starting inference by writing into CSRs directly via JTAG. This design example targets the Agilex 5 FPGA E-Series 065B Modular Development Kit (MK-A5E065BB32AES1). |

| SoC design example | Demonstrates how OpenVINO toolkit and the FPGA AI Suite support the CPU-offload deep-learning acceleration model in an embedded system. The design example targets the following development boards: * Agilex 7 FPGA I-Series Transceiver-SoC Development Kit (DK-SIAGI027FC) * Arria® 10 SX SoC FPGA Development Kit (DK-SOC-10AS066S) |

Table 3. FPGA AI Suite Design Examples Properties Overview¶

| Example | Design Type | Target FPGA Device | Host | Memory | Stream* | Design Example Identifier** | Supported Development Kit |

|---|---|---|---|---|---|---|---|

| PCIe-Attached | Agilex 7 | External host processor | DDR | M2M | agx7_de10_pcie | Terasic DE10-Agilex Development Board (DE10-Agilex-B2E2) | |

| PCIe-Attached | Agilex 7 | External host processor | DDR | M2M | agx7_iseries_ofs_pcie | Agilex 7 FPGA I-Series Development Kit ES2 (DK-DEV-AGI027RBES) | |

| PCIe-Attached | Agilex 7 | External host processor | DDR | M2M | agx7_n6001_ofs_pcie | Intel FPGA SmartNIC N6001-PL Platform (without Ethernet controller) | |

| Hostless DDR-Free | Agilex 7 | Hostless | DDR-Free | Direct | agx7_iseries_ddrfree | Agilex 7 FPGA I-Series Development Kit ES2 (DK-DEV-AGI027RBES) | |

| Hostless JTAG Attached | Agilex 5 | Hostless | DDR | M2M | agx5e_modular_jtag | Agilex 5 FPGA E-Series 065B Modular Development Kit (MK-A5E065BB32AES1) | |

| SoC | Agilex 7 | On-device HPS | DDR | M2M and S2M | agx7_soc_m2m agx7_soc_s2m |

Agilex 7 FPGA I-Series Transceiver-SoC Development Kit (DK-SIAGI027FC) | |

| SoC | Arria 10 | On-device HPS | DDR | M2M and S2M | a10_soc_m2m a10_soc_s2m |

Arria 10 SX SoC FPGA Development Kit (DK-SOC-10AS066S) | |

| SoC | Agilex 5 | On-device HPS | DDR | M2M and S2M | agx5_soc_m2m agx5_soc_s2m |

Agilex 5 FPGA E-Series 065B Modular Development Kit (MK-A5E065BB32AES1) |

*For the Design Example Identifier column, these entries are the value to use with the FPGA AI Suite Design Example Utility (dla_build_example_design.py) command to build the design example

**For the Stream column, the entries are defined as follows:

M2M FPGA AI Suite runtime software running on the external host transfers the image (or data) to the FPGA DDR memory.

S2M Streaming input data is copied to FPGA on-device memory. The FPGA AI Suite runtime runs on the FPGA device (HPS or RTL state machine). The runtime is used only to coordinate the data transfer from FPGA DDR memory into the FPGA AI Suite IP.

Direct Data is streamed directly in and out of the FPGA on-chip memory.

3.0 Shared Design Example Components¶

3.1. FPGA AI Suite Design Example Utility¶

The main entry point into the example design build system is the FPGA AI Suite design example utility (dla_build_example_design.py). This utility coordinates different aspects of building a design example, from the initial project configuration to extracting area metrics after a compile completes. With this utility, you can create bitstreams from custom AI Suite IP architecture files and with a configurable number of instances.

Note: There is no '.py' extension on dla_build_example_design when using the FPGA AI Suite on Windows.

To use the FPGA AI Suite design example build utility, ensure that your local development environment has been setup according to the steps in "Installing FPGA AI Suite Compile and IP Generation Tools" in FPGA AI Suite Handbook.

3.1.1. The dla_build_example_design.py Command¶

The FPGA AI Suite design example utility (dla_build_example_design.py) configures and compiles the bitstreams for the FPGA AI Suite design examples. The command has the following basic syntax:

where [action] is one of the following actions:

Table 4. Design Example Utility Actions¶

| Action | Description |

|---|---|

| list | List the available example designs. |

| build | Build an example design. |

| qor | Generate QoR reports. |

| quartus-compile | Run a Quartus® Prime compile. |

| scripts | Managed the build support scripts. |

Some of the command actions have different additional required and optional parameters. Use the command help to see a list of available options for the command and its actions.

By default, the dla_build_example_design.py command always instructs the dla_create_ip command to create licensed IP. If no license can be found, inference-limited, unlicensed RTL is generated. The build log indicates if the IP is licensed or unlicensed. For more information about licensed and unlicensed IP, refer to "The --unlicensed/--licensed Options" in FPGA AI Suite IP Reference Manual.

Getting dla_build_example_design.py Command Help¶

For general help with the command and to see the list of available actions, run the following command:

For help with a command actions and to the list of available options and arguments required for an action, run the following command:

Command Logging and Debugging¶

By default, the FPGA AI Suite design example utility logs its output to a set location in the (default) build_platform directory. Override this location by specifying the --logfile logfile option.

For extra debugging output, specify the --debug option.

Both the logging and debugging options must be specified before the command action.

3.1.2. Listing Available FPGA AI Suite Design Examples¶

To list the available FPGA AI Suite design examples, run the following command:

This command shows the design example identifiers (used with the build action of the design example utility) along with a short description of the design example and its target Quartus Prime version.

A list of the design examples and their identifiers is also available in FPGA AI Suite Design Examples Properties Overview.

3.1.3. Building FPGA AI Suite Design Examples¶

To build FPGA AI Suite design examples, specify the build option of the design example utility.

In the most simple case of building a design example for an existing architecture, you can build a design example with the following command:

For example, use the following command to build the Agilex 7 PCIe-based design example that targets the DE10-Agilex-B2E2 board using the AGX7_Generic architecture:

dla_build_example_design.py build \

agx7_de10_pcie \

$COREDLA_ROOT/example_architectures/AGX7_Generic.arch

The default build directory is build_platform. The utility creates the folder if it does not exist. To specify a different build directory, specify the --output-dir <directory> option:

dla_build_example_design.py build \

--output-dir <directory> \

<design_example_identifier> \

<architecture file>

Be default, the utility also prevents the build directory from being overwritten. You can override this behavior with the --force option.

After the build is complete, the build directory has the following files and folders:

- coredla_ip/

This folder contains the RTL for the configured FPGA AI Suite IP.

- hw/

This folder contains the Quartus Prime or Open FPGA Stack (OFS) project files. It also includes its own self-contained copy of the contents of thecoredla_ip/folder

- .build.json

This contents of this file (sometimes referred to as the "build context" file) allow the build to be split into multiple steps.

- Reports

The build directory will contain any log files generated by the build utility (such asbuild.log) and the QoR summary that is generated by a successful compilation.

- Bitstreams

This build directory will contain the bitstreams to program the target FPGA device as follows:- For OFS-based designs,

.gbsfiles. - For other designs,

.sofand.rbffiles.

- For OFS-based designs,

3.1.3.1. Staging FPGA AI Suite Design Example Builds¶

The FPGA AI Suite design example utility supports staged builds via the --skip-compile option. When you specify this option, the utility creates the build folder and prepares the Quartus Prime or Open FPGA Stack (OFS) project but does not run compilation.

With a staged design example build flow, you must run the utility a few times to generate the same outputs as the regular build flow. For a complete build with a staged build flow, you would run the following commands:

dla_build_example_design.py build --skip-compile \

<design_example_identifier> \

<architecture file>

dla_build_example_design.py quartus-compile build_platform

# Optional: Generate QoR summary

dla_build_example_design.py qor build_platform

Information about the build configuration, namely what was passed into the dla_build_example_design.py build command, is stored inside of the build context (.build.json) file.

You can run the dla_build_example_design.py quartus-compile and dla_build_example_design.py qor commands multiple times. An example of when running these commands multiple times can be useful is when you have to recompile the bitstream after removing an unnecessary component from the design example.

You can also directly call the design compilation script. An FPGA AI Suite design example uses one of the following scripts, depending on whether the design example can built in a WSL 2 environment:

- generate_sof.tcl

Design examples with this design compilation script can be built in a WSL 2 environment.

- build_project.sh

Design examples with this design compilation script cannot be built in a WSL 2 environment.

If a design example uses a generate_sof.tcl script, then you can invoke the design compilation script either after opening the design example project in Quartus Prime or by running the following command:

If a design example uses the build_project.sh build script, the script must be executed in a Bash-compatible shell. For example:

For both design example compilation scripts, the current working directory must be the hw/ directory.

3.1.3.2. WSL 2 FPGA AI Suite Design Example Builds¶

Staged builds allow the build utility to support hybrid Windows/Linux compilation in a WSL 2 environment on a Windows system. In a WSL 2 environment, FPGA AI Suite is installed in the WSL 2 Linux guest operating system while Quartus Prime is installed in the Windows host operating system.

Restriction: Only a subset of the FPGA AI Suite design examples can be build in a WSL 2 environment. For a list of design examples that support WSL 2, run the following command:

When you run the dla_build_example_design.py build command, the WSL 2 flow is enabled with the --wsl option. This option tells the utility to resolve the path to the build directory as a Windows file path instead of a Linux file path. The utility displays messages that provide you with more details about how to use the staged build commands to complete the compilation in your WSL 2 environment.

Important: If you specify a build directory in a WSL 2 environment with the --output-dir options, that build directory must be a relative path. This requirement is due to a limitation in how the WSL 2 environment maps paths between the Linux guest and Windows.

3.2. Example Architecture Bitstream Files¶

The FPGA AI Suite provides example Architecture Files and bitstreams for the design examples. The files are located in the FPGA AI Suite installation directory.

3.3. Design Example Software Components¶

The design examples contain a sample software stack for the runtime flow.

For a typical design example, the following components comprise the runtime stack:

- OpenVINO Toolkit (Inference Engine, Heterogeneous Plugin)

- FPGA AI Suite runtime plugin

- Vendor-provided FPGA board driver or OPAE driver (depending on the design example)

The design example contains the source files and Makefiles to build the FPGA AI Suite runtime plugin. The OpenVINO component (and OPAE components, where used) is external and must be manually preinstalled.

A separate flow compiles the AI network graph using the FPGA AI Suite compiler, as shown in Figure 1 Software Stacks for FPGA AI Suite Inference that follows as the Compilation Software Stack.

The compilation flow output is a single binary file called CompiledNetwork.bin that contains the compiled network partitions for FPGA and CPU devices along with the network weights. The network is compiled for a specific FPGA AI Suite architecture and batch size. This binary is created on-disk only when using the Ahead-Of-Time flow; when the JIT flow is used, the compiled object stays in-memory only.

An Architecture File describes the FPGA AI Suite IP architecture to the compiler. You must specify the same Architecture File to the FPGA AI Suite compiler and to the FPGA AI Suite design example utility (dla_build_example_design.py).

The runtime flow accepts the CompiledNetwork.bin file as the input network along with the image data files.

Figure 1. Software Stacks for FPGA AI Suite Inference¶

The runtime stack cannot program the FPGA with a bitstream. To build a bitstream and program the FPGA devices:

- Compile the design example.

- Program the device with the bitstream.

Instructions for these steps are provided in the sections for each design example.

To run inference through the OpenVINO Toolkit on the FPGA, set the OpenVINO device configuration flag (used by the heterogeneous Plugin) to FPGA or HETERO:FPGA,CPU.

3.3.1. OpenVINO FPGA Runtime Overview¶

The purpose of the runtime front end is as follows:

- Provide input to the FPGA AI Suite IP

- Consume output from the FPGA AI Suite IP

- Control the FPGA AI Suite IP

Typically, this front-end layer provides the following items:

- The

.archfile that was used to configure the FPGA AI Suite on the FPGA. - The ML model (possibly precompiled into an Ahead-of-Time

.binfile by the FPGA AI Suite compiler (dla_compiler)). - A target device that is passed to OpenVINO

The target device may instruct OpenVINO to use the HETERO plugin, which allows a graph to be partitioned onto multiple devices.

One of the directories provided in the installation of the FPGA AI Suite is the runtime/ directory. In this directory, the FPGA AI Suite provides the source code to build a selection of OpenVINO applications. The runtime/ directory also includes the dla_benchmark command line utility that you can use to generate inference requests and benchmark the inference speed.

The following applications use the OpenVINO API. They support the OpenVINO HETERO plugin, which allows portions of the graph to fall-back onto the CPU for unsupported graph layers.

dla_benchmark(adapted from OpenVINO benchmark_app)classification_sample_asyncobject_detection_demo_yolov3_asyncsegmentation_demo

Each of these applications serve as a runtime executable for the FPGA AI Suite. You might want to write your own OpenVINO-based front ends to wrap the FPGA plugin. For information about writing your own OpenVINO-based front ends, refer to the OpenVINO documentation.

Some of the responsibilities of the OpenVINO FPGA plugin are as follows:

- Inference Execution

- Mapping inference requests to an IP instance and internal buffers

- Executing inference requests via the IP, managing synchronization and all data transfer between host and device.

- Input / Output Data Transform

- Converting the memory layout of input/output data

- Converting the numeric precision of input/output data

3.3.2. OpenVINO FPGA Runtime Plugin¶

The FPGA runtime plugin uses the OpenVINO Inference Engine Plugin API.

The OpenVINO Plugin architecture is described in the OpenVINO Developer Guide for Inference Engine Plugin Library.

The source files are located under runtime/plugin. The three main components of the runtime plugin are the Plugin class, the Executable Network class, and the Inference Request class. The primary responsibilities for each class are as follows:

Plugin class

- Initializes the runtime plugin with an FPGA AI Suite architecture file which you set as an OpenVINO configuration key (refer to PCIE - Running the Ported OpenVINO Demonstration Applications).

- Contains

QueryNetworkfunction that analyzes network layers and returns a list of layers that the specified architecture supports. This function allows network execution to be distributed between FPGA and other devices and is enabled with the HETERO mode.

- Creates an executable network instance in one of the following ways:

- Just-in-time (JIT) flow: Compiles a network such that the compiled network is compatible with the hardware corresponding to the FPGA AI Suite architecture file, and then loads the compiled network onto the FPGA device.

- Ahead-of-time (AOT) flow: Imports a precompiled network (exported by FPGA AI Suite compiler) and loads it onto the FPGA device.

Executable Network Class

- Represents an FPGA AI Suite compiled network

- Loads the compiled model and config data for the network onto the FPGA device that has already been programmed with an FPGA AI Suite bitstream. For two instances of FPGA AI Suite, the Executable Network class loads the network onto both instances, allowing them to perform parallel batch inference.

- Stores input/output processing information.

- Creates infer request instances for pipelining multiple batch execution.

Infer Request class

- Runs a single batch inference serially.

- Executes five stages in one inference job – input layout transformation on CPU, input transfer to DDR, FPGA AI Suite FPGA execution, output transfer from DDR, output layout transformation on CPU.

- In asynchronous mode, executes the stages on multiple threads that are shared across all inference request instances so that multiple batch jobs are pipelined, and the FPGA is always active.

3.3.3. FPGA AI Suite Runtime¶

The FPGA AI Suite runtime implements lower-level classes and functions that interact with the memory-mapped device (MMD). The MMD is responsible for communicating requests to the driver, and the driver connects to the BSP, and ultimately to the FPGA AI Suite IP instance or instances.

The runtime source files are located under runtime/coredla_device. The three most important classes in the runtime are the Device class, the GraphJob class, and the BatchJob class.

Device class

- Acquires a handle to the MMD for performing operations by calling

aocl_mmd_open.

- Initializes a DDR memory allocator with the size of 1 DDR bank for each FPGA AI Suite IP instance on the device.

- Implements and registers a callback function on the MMD DMA (host to FPGA) thread to launch FPGA AI Suite IP for batch=1 after the batch input data is transferred from host to DDR.

- Implements and registers a callback function (interrupt service routine) on the MMD kernel interrupt thread to service interrupts from hardware after one batch job completes.

- Provides the

CreateGraphJobfunction to create a GraphJob object for each FPGA AI Suite IP instance on the device.

- Provides the

WaitForDla(instance id) function to wait for a batch inference job to complete on a given instance. Returns instantly if the number of batch jobs finished (that is, the number of jobs processed by interrupt service routine) is greater than number of batch jobs waited for this instance. Otherwise, the function waits until interrupt service routine notifies. Before returning, this function increments the number of batch jobs that have been waited for this instance.

GraphJob class

- Represents a compiled network that is loaded onto one instance of the FPGA AI Suite IP on an FPGA device.

- Allocates buffers in DDR memory to transfer configuration, filter, and bias data.

- Creates BatchJob objects for a given number of pipelines and allocates input and output buffers for each pipeline in DDR.

BatchJob class

- Represents a single batch inference job.

- Stores the DDR addresses for batch input and output data.

- Provides

LoadInputFeatureToDdrfunction to transfer input data to DDR and start inference for this batch asynchronously.

- Provides

ReadOutputFeatureFromDdrfunction to transfer output data from DDR. Must be called after inference for this batch is completed.

3.3.4. FPGA AI Suite Custom Platform¶

Figure 2. Overview of FPGA AI Suite MMD Runtime¶

The interface to the user-space portion of the BSP drivers is centralized in the MmdWrapper class, which can be found in the file $COREDLA_ROOT/runtime/coredla_device/inc/mmd_wrapper.h. This file is a wrapper around the MMD API.

The FPGA AI Suite runtime uses this wrapper so that the runtime can be reused on all platforms. When porting the runtime to a new board, you must ensure that each of the member functions in MmdWrapper calls into a board-specific implementation function. You must also modify the runtime build process and adjacent code.

Any implementation of a runtime for the FPGA AI Suite must support the following features via the MMD Wrapper:

- Open the device

- Register an interrupt service routine

- Read/write 32-bit register values in the IP control-and-status register (CSR)

- Transfer bulk data between the host and device

3.3.5. Memory-Mapped Device (MMD) Driver¶

The FPGA AI Suite runtime MMD software uses a driver to access and interact with the FPGA device. To integrate the FPGA AI Suite IP into your design on your platform, the MMD layer must interface with the hardware using the appropriate drivers (such as OPAE, UIO, or a custom driver). For example, the PCIe-based design example uses the drivers provided by the OpenCL board support package (BSP) for the Terasic DE10-Agilex Development Board.

If your board vendor provides a BSP, you can use the MMD Wrapper to interface the BSP with the FPGA AI Suite IP. Review the following sections for examples of adapting a vendor-provided BSP to use with the FPGA AI Suite IP:

You can create a custom BSP for your board, but that process can be complex and can require more work.

The FPGA AI Suite runtime MMD software uses a driver to access and interact with the FPGA device. This driver is supplied as part of the board vendor BSP or, for OFS-based boards, the OPAE driver.

The source files for the MMD driver are provided in runtime/coredla_device/mmd. The source files contain classes for managing and accessing the FPGA device by using driver-supplied functions for reading/writing to CSR, reading/writing to DDR, and handling kernel interrupts.

Obtaining BSP Drivers¶

Contact your FPGA board vendor for information about the BSP for your FPGA board.

Obtaining the OPAE Drivers¶

Contact your FPGA board vendor for information about the OPAE driver for your FPGA board.

For the FPGA AI Suite OFS for PCIe attach design example, the OPAE driver is installed when you follow the steps in Getting Started with Open FPGA Stack (OFS) for PCIe-Attach Design Examples.

3.3.6. FPGA AI Suite Runtime MMD API¶

This section describes board-level functions that are defined in the mmd_wrapper.cpp file. Your implementation of the functions in the mmd_wrapper.cpp file for your specific board may differ. For examples of these functions, refer to the provided MMD implementations under $COREDLA_ROOT/runtime/coredla_device/mmd/.

The mmd_wrapper.cpp file contains the following MMD functions that are adapted from the Open FPGA Stack (OFS) accelerator support package (ASP) functions of the same name. For more information about these functions, refer to the OFS AFS Memory Mapped Device Layer documentation.

Although several of the functions in the FPGA AI Suite MMD API share names and intended behavior with OpenCL MMD API functions, you do not need to use an OpenCL BSP. The naming convention is maintained for historical reasons only.

The mmd_wrapper.cpp file contains the following functions provided only with the FPGA AI Suite:

- dla_mmd_get_max_num_instances

- dla_mmd_get_ddr_size_per_instance

- dla_mmd_get_coredla_clock_freq

- dla_mmd_get_ddr_clock_freq

- dla_mmd_csr_read

- dla_mmd_csr_write

- dla_mmd_ddr_read

- dla_mmd_ddr_write

- dla_is_stream_controller_valid

- dla_mmd_stream_controller_read

- dla_mmd_stream_controller_write

The dla_mmd_get_max_num_instances Function¶

Returns the maximum number of FPGA AI Suite IP instances that can be instantiated on the platform. In the FPGA AI Suite PCIe-based design examples, this number of IP instances that can be instantiated is the same as the number of external memory interfaces (for example, DDR memories).

Syntax

The dla_mmd_get_ddr_size_per_instance Function¶

Returns the maximum amount of external memory available to each FPGA AI Suite IP instance.

Syntax

The dla_mmd_get_coredla_clock_freq Function¶

Given the device handle, return the FPGA AI Suite IP PLL clock frequency in MHz. Return a negative value if there is an error.

In the PCIe-based design example, this value is determined by allowing a set amount of wall clock time to elapse between reads of counters onboard the IP.

Syntax

The dla_mmd_get_ddr_clock_freq Function¶

Returns the DDR clock frequency, in Mhz. Check the documentation from your board vendor to determine this value.

Syntax

The dla_mmd_csr_read Function¶

Performs a control status register (CSR) read for a given instance of the FPGA AI Suite IP at a given address. The result is stored in the data directory.

Syntax

The dla_mmd_csr_write Function¶

Performs a control status register (CSR) write for a given instance of the FPGA AI Suite IP at a given address.

Syntax

The dla_mmd_ddr_read Function¶

Performs an external memory read for a given instance of the FPGA AI Suite IP at a given address. The result is stored in the data directory.

Syntax

The dla_mmd_ddr_write Function¶

Performs an external memory write for a given instance of the FPGA AI Suite IP at a given address.

Syntax

The dla_is_stream_controller_valid Function¶

Optional. Required if STREAM_CONTROLLER_ACCESS is defined.

Queries the streaming controller device to see if it is valid.

Syntax

For more information about the stream controller module, refer to [SOC] Stream Controller Communication Protocol.

The dla_mmd_stream_controller_read Function¶

Optional. Required if STREAM_CONTROLLER_ACCESS is defined.

Reads an incoming message from the streaming controller.

Syntax

int dla_mmd_stream_controller_read(int handle, int instance, uint64_t addr, uint64_t length, void* data)

For more information about the streaming controller device, refer to [SOC] Stream Controller Communication Protocol.

The dla_mmd_stream_controller_write Function¶

Optional. Required if STREAM_CONTROLLER_ACCESS is defined.

Writes an outgoing message from the streaming controller.

Syntax

int dla_mmd_stream_controller_write(int handle, int instance, uint64_t addr, uint64_t length, const void* data)

For more information about the streaming controller device, refer to [SOC] Stream Controller Communication Protocol.

3.3.7. Board Support Package (BSP) Overview¶

Every FPGA platform consists of the FPGA fabric and the hard IP that surrounds it. For example, an FPGA platform might provide an external memory interface as hard IP to provide access to external DDR memory. Soft logic that is synthesized on the FPGA fabric needs to be able to communicate with the hard IP blocks, and the implementation details are typically platform-specific.

A board support package (BSP) typically consists of two parts:

- A software component that runs in the host operating system.

This component includes the MMD and operating system driver for the board.

- A hardware component that is programming into the FPGA fabric.

This component consists of soft logic that enables the use of the FPGA peripheral hard IP blocks around the FPGA fabric. This component acts as the bridge between the FPGA AI Suite IP block in the FPGA fabric and the hard IP blocks.

Depending on your board and board vendor, you can have the following options for obtaining a BSP:

- If your board supports the Open FPGA Stack (OFS), you can use (and adapt, if necessary) an OFS reference design or FPGA interface manager (FIM).

For some boards, there are precompiled FIM reference designs available.

- Obtain a BSP directly from your board vendor. You board vendor might have multiple BSPs available for you board.

- Create your own BSP.

For a BSP to be compatible with FPGA AI Suite, the BSP must provide the following capabilities:

- Enable the FPGA AI Suite IP and the host to interface with the external memory interface IP.

- Enable the FPGA AI Suite IP to interface with the runtime (for example, PCIe IP for the PCIe-based design example, or the HPS-to-FPGA AXI bridge for the SoC design example).

- Enable the FPGA AI Suite IP to send interrupts to the runtime. If your BSP does not support this capability, you must use polling to determine when an inference is complete.

- Enable the host to access the FPGA AI Suite IP CSR.

The BSPs available for the boards supported by the FPGA AI Suite design example support these capabilities.

Related Information

Open FPGA Stack (OFS) documentation.

3.3.7.1. Terasic DE10-Agilex Development Board BSP Example¶

For the Agilex 7 PCIe-based design example on the Terasic DE10-Agilex Development Board, the BSP provided by Terasic is adapted to work with the FPGA AI Suite IP. The Terasic-provided BSP is OpenCL™-based.

The following diagram shows the high-level interactions between the FPGA interface IPs on the platform, and the a custom OpenCL kernel. The different colors in the diagram indicate different clock domains.

Figure 3. Terasic BSP with OpenCL Kernel¶

The PCIe hard IP can read/write to the DDR4 external memory interface (EMIF) via the DMA and the Arbitrator. Additional logic is provided to handle interrupts from the custom IP and propagate them back to the host through the PCIe interface.

The following diagram hows how the Terasic DE10-Agilex Development Board BSP can be adapted to support the FPGA AI Suite IP.

Figure 4. Terasic BSP With FPGA AI Suite IP¶

Platform Designer automatically adds clock-domain crossings between Avalon memory-mapped interfaces and AXI4 interfaces, making the integration with the BSP easier.

For a custom platform, consider following a similar approach of modifying the BSP provided by the vendor to integrate in the FPGA AI Suite IP.

3.3.7.2. Agilex 7 PCIe-Attach OFS-based BSP Example¶

For OFS-based devices, the BSP consists of a platform-specific FPGA interface manager (FIM) and a platform-agnostic accelerator functional unit (AFU).

The FPGA AI Suite OFS for PCIe attach design example supports Agilex 7 PCIe Attach OFS.

You can obtain the source files needed to build a Agilex 7 PCIe Attach FIM or obtain prebuillt FIMs for some boards from OFS Agilex 7 PCIe Attach FPGA Development Directory in GitHub.

The AFU wraps the FPGA AI Suite IP and must meet the following general requirements:

- The AFU must include an instance of the FPGA AI Suite IP.

- The AFU must support host access (for example, via DMA) to external memory that is shared with the FPGA AI Suite IP.

- The AFU must propagate interrupts from the FPGA AI Suite IP to the host.

- The AFU Must support host access to the FPGA AI Suite IP CSR memory.

If you are creating your own FPGA AI Suite AFU, consider starting with an AFU example design that implements some of the required functionality. Some examples designs and what they are offer are as follows:

- For an example of enabling direct memory access so the host can access DDR memory, review the direct memory access (DMA) AFU example on GitHub

- For an example of interrupt handling, review the oneAPI Accelerator Support Package (ASP) on GitHub.

- For an example MMD implementation, review the oneAPI Accelerator Support Package (ASP) on GitHub.

Related Information

- Direct memory access (DMA) AFU example on GitHub

- oneAPI accelerator support package (ASP) on GitHub

- Agilex 7 PCIe Attach OFS documentation

- Agilex 7 PCIe Attach OFS Workload Development Guide

4.0 FPGA AI Suite SoC Design Example Prerequisites¶

The SoC design example requires one of the following development kits: * Agilex 5 FPGA E-Series 065B Modular Development Kit (MK-A5E065BB32AES1) This development kit features and Agilex 5 E-Series devices (OPN: A5ED065BB32AE6S-R0).

* *For more details about this development kit, refer to the following URL: [https://www.intel.com/content/www/us/en/products/details/fpga/development-kits/agilex/a5e065b-modular.html](https://www.intel.com/content/www/us/en/products/details/fpga/development-kits/agilex/a5e065b-modular.html).*

- Agilex 7 FPGA I-Series Transceiver-SoC Development Kit (DK-SI-AGI027FC) This development kit features an Agilex 7 I-Series SoC device with 4 F-Tiles (OPN: AGIB027R31B1E1V).

* Important: The FPGA AI Suite requires DDR4 memory with an x8 or higher component data width. The RAM device provided with the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit provides only a x4 component data width. For more details and recommended RAM modules, refer to Agilex 7 FPGA I-Series Transceiver-SoC Development Kit Hardware Requirements

For more details about this development kit, refer to the following URL: https://www.intel.com/content/www/us/en/products/details/fpga/development-kits/agilex/si-agi027.html

- Arria 10 SX SoC FPGA Development Kit (DK-SOC-10AS066S) This development kit features an Arria 10 SX 660 device (OPN: 10AS066N3F40E2SG) with a “ -2” speed grade with the included DDR4 HILO memory cards. * *For more details about this development kit, refer to the following URL: https://www.intel.com/content/www/us/en/products/details/fpga/developmentkits/arria/10-sx.html*

In addition, the following hardware components are required: * SDHC flash card, class 10 speed or faster (minimum 2 GB but 4 GB or more is recommended) * Mini-USB cable suitable for connecting the development board to a host PC * Ethernet cable suitable for connecting the development board to a network to provide access from a host PC on the same network

The host PC must use a supported operating system (Red Hat* Enterprise Linux* 8, Ubuntu* 20.04, or Ubuntu 22.04), and must have an internet connection to install the software dependencies.

To build bitstreams, Quartus Prime Pro Edition Version 25.3 must be installed on the host system.

Although the development host system does not need to be the same as the system used to build packages and bitstreams, this guide does not explicitly cover the scenario where they are distinct.

4.1 Agilex 7 FPGA I-Series Transceiver-SoC Development Kit Hardware Requirements¶

The FPGA AI Suite SoC design example requires x8 (or wider) DDR4 memory.

The RAM module provided with the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit does not support the FPGA AI Suite SoC design example because the included RAM module provides only an x4 width.

The design example has been verified on a development kit fitted with a Kingston* x8 RDIMM (KSM32RS8/16MFR). Intel recommends using this memory module to help you successfully use the design example.

4.2 FPGA AI Suite SoC Design Example Quick Start Tutorial¶

The SoC design example quick start tutorial provides instructions to do the following tasks:

- Build a bitstream and flash card image for the FPGA development kit.

- Run the

dla_benchmarkutility from the example runtime on the SoC FPGA HPS (Arm processor) host. This example runtime uses the memory-to-memory (M2M) model. - Run the streaming image application that streams data from the HPS Arm processor host to the FPGA device in a way that mimics how data is streamed from any other input source (such as Ethernet, HDMI, or MIPI). This streaming image application uses the steaming-to-memory (S2M) model.

The FPGA AI Suite SoC design example shows how the Intel Distribution of OpenVINO toolkit and the FPGA AI Suite support the CPU-offload deep learning acceleration model in an embedded system

The SoC design examples are implemented with the following components:

- FPGA AI Suite IP

- Intel Distribution of OpenVINO toolkit

- The community-supported OpenVINO ARM plugin

- Sample hardware and software systems that illustrate the use of these components

- Arm*-Linux build scripts built using Yocto frameworks for the hard processor systems (HPSs) on the following FPGA SoC devices:

- Agilex 5 E-Series SoC

- Agilex 7 I-Series SoC

- Arria 10 SX SoC

For an easier initial experience, these design examples include prebuilt FPGA bitstreams and a Linux-compiled system image that correspond to pre-optimized FPGA AI Suite architecture files.

You can copy this disk-image to an SD card and insert the card into a supported FPGA development kit. Additionally, you can use the design example scripts to choose from a variety of architecture files and build (or rebuild) your own bitstreams, subject to IP licensing limitations.

This quick start tutorial assumes that you have reviewed the following sections in the FPGA AI Suite Getting Started Guide:

SoC Design Example Quick Start Tutorial Prerequisites¶

Before you start the tutorial ensure that you have successfully completed the installation tasks outlined in “Installing the FPGA AI Suite Compiler and IP Generation Tools” in the FPGA AI Suite Handbook.

The remaining sections of the FPGA AI Suite Getting Started Guide can help you understand the overall flow of using the FPGA AI Suite, but they are not required to complete this quick start tutorial.

In this section, some overlay architecture (.arch) files referred to in the instructions include the suffix “LayoutTransform”. This indicates that the FPGA AI Suite internal layout transform (described in the FPGA AI Suite IP Reference Manual) is enabled. On Agilex 7 devices, this internal layout transform must be enabled for S2M operation, and is optional for M2M operation.

4.2.1 Initial Setup¶

The quick start tutorial instructions assume that you have initialized your environment for the FPGA AI Suite with the init_env.sh script from a shell that is compatible with the Bourne shell (sh).

The FPGA AI Suite init_env.sh script might already be part of your shell login script. If not, then use the following command to initialize your shell environment:

This command assumes that the FPGA AI Suite is installed in the default location. If you are using an FPGA AI Suite version other than 2025.3, adjust the path to script accordingly.

4.2.2 Initializing a Work Directory¶

While you can build the design example directly in the $COREDLA_ROOT location, it is better to use a work directory. You can create a work directory as follows:

If you created a work directory while following the instructions in the FPGA AI Suite Getting Started Guide, the dla_init_local_directory.sh script prompts you to use the coredla_work.sh script instead to set the $COREDLA_WORK environment variable.

4.2.3 (Optional) Create an SD Card Image (.wic)¶

An SD card provides the FPGA bitstream and HPS disk image to the SoC FPGA development kit. You can build your own SD card image or use the prebuilt image provided by the FPGA AI Suite SoC design example.

If you want to use the prebuilt image, skip this section and go to Writing the SD Card Image (.wic) to an SD Card.

Important: You cannot build the SD card as root due to security checks in the BitBake tool used when creating an SD card image.

4.2.3.1 Installing Prerequisite Software for Building an SD Card Image¶

Building the SD card image requires the following additional software:

- Quartus Prime Pro Edition Version 25.3

- Ashling* RiscFree* IDE for Altera®

- (Ubuntu only) Ubuntu package

libncurses5PATH

If you did not install Quartus Prime Pro Edition Version 25.3 when following the instructions in the FPGA AI Suite Getting Started Guide, you must install it now.

Building the SD card image also requires tools provided by Ashling RiscFree IDE for Altera. You can install Ashling RiscFree IDE from a separate installation package or part of your Quartus Prime bundled installation package.

You can download the required software from the following URL: https://www.altera.com/downloads/fpga-development-tools/quartus-prime-pro-edition-design-software-version-25-3-linux.

To install the prerequisite software for building an SD card image:

- Install Quartus Prime Pro Edition and Ashling RiscFree IDE for Altera

-

(Ubuntu only) Install Ubuntu package libcurses with the following command:

-

Ensure that the QUARTUS_ROOTDIR environment variable is set properly:

If the QUARTUS_ROODIR is not set, run the following command:

If you chose install Quartus Prime in a location other than the default location, adjust the path in export command to match your Quartus Prime installation location

-

Ensure your

$PATHenvironment variable includes paths to the installed Quartus Prime and Ashling RiscFree IDE binaries. Adjust the following commands appropriately if you did not install into the default location: -

Confirm that Quartus Prime Pro Edition Version 25.3 is installed by running the following command:

Related Information

4.2.4 Building the FPGA Bitstreams¶

The FPGA AI Suite SoC design example also includes prebuilt demonstration FPGA bitstreams. If you want to use the prebuilt demonstration bitstreams in your SD card image, skip ahead to Installing HPS Disk Image Build Prerequisites.

If you build your own bitstreams and do not have an FPGA AI Suite IP license, then your bitstream have a limit of 10000 inferences. After 10000 inferences, the unlicensed IP refuses to perform any additional inference. To reset the limit, reprogram the FPGA device.

Building the FPGA Bitstream for the Agilex 5 FPGA E-Series 065B Modular Development Kit¶

To build the FPGA bitstream for the, Agilex 5 FPGA E-Series 065B Modular Development Kit run the following command:

dla_build_example_design.py build \

--output-dir $COREDLA_WORK/agx5_perf_bitstream \

-n 1 \

agx5_soc_s2m \

$COREDLA_ROOT/example_architectures/AGX5_Performance.arch

Building the FPGA Bitstream for the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit¶

To build the FPGA bitstream for the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit, run the following command:

dla_build_example_design.py build \

--output-dir $COREDLA_WORK/agx7_perf_bitstream \

-n 1 \

agx7_soc_s2m \

$COREDLA_ROOT/example_architectures/AGX7_Performance_LayoutTransform.arch

The bitstream built by this command supports both the M2M execution model and the S2M execution model.

This design example bitstream is built with a “LayoutTransform” architecture because the design example uses the FPGA AI Suite IP internal layout transform rather than an external layout transform for converting image buffers to the target memory format. The layout transform is required for S2M bitstreams, but is optional for M2M bitstreams. For more information about the layout transform hardware, refer to “Input Feature Tensor In-Memory Format” in FPGA AI Suite Handbook.

Building the FPGA Bitstream for the Arria 10 SX SoC FPGA Development Kit¶

To build the FPGA bitstream for the Arria 10 SX SoC FPGA Development Kit, run the following command:

dla_build_example_design.py build \

--output-dir $COREDLA_WORK/a10_perf_bitstream \

-n 1 \

a10_soc_s2m \

$COREDLA_ROOT/example_architectures/A10_Performance.arch

The bitstream built by this command supports both the M2M execution model and the S2M execution model.

4.2.5 Installing HPS Disk Image Build Prerequisites¶

The process to build the HPS disk image has additional prerequisites. To install these prerequisites, follow the instructions for your operating system in the following sections:

- Red Hat Enterprise Linux 8 Prerequisites

- Red Hat Enterprise Linux 9 Prerequisites

- Ubuntu 20 Prerequisites

- Ubuntu 22 Prerequisites

- Ubuntu 24 Prerequisites

Red Hat Enterprise Linux 8 Prerequisites¶

To install the prerequisites for Red Hat Enterprise Linux 8:

-

Enable additional Red Hat* Enterprise Linux 8 repository and package manager:

-

Install the dependency packages:

sh sudo dnf install gawk wget git diffstat unzip texinfo gcc gcc-c++ make \ chrpath socat cpio python3 python3-pexpect xz iputils python3-jinja2 \ mesa-libEGL SDL xterm python3-subunit rpcgen zstd lz4 perl-open.noarch \ perl-Thread-Queue numactl-devel cmake git curl graphviz gcc gcc-c++ redhat-lsb \ tbb-devel gflags-devel boost-devel ninja-build -

Install packages required to create the flash card image and FPGA AI Suite runtime and dependencies:

cd /tmp mkdir uboot_tools && cd uboot_tools wget https://kojipkgs.fedoraproject.org/\ vol/fedora_koji_archive02/packages/uboot-tools/2018.03/3.fc28/x86_64/\ uboot-tools-2018.03-3.fc28.x86_64.rpm sudo dnf install ./uboot-tools-2018.03-3.fc28.x86_64.rpm sudo dnf install ninja-build fakeroot sudo python3 -m pip install pylint passlib scons -

Install CMake Version 3.16.3 or later:

-

Install Make Version 4.3 or later:

-

Add the /sbin directory to your

$PATHenvironment variable:

Red Hat Enterprise Linux 9 Prerequisites¶

To install the prerequisites for Red Hat Enterprise Linux 9:

-

Enable additional Red Hat* Enterprise Linux 9 repository and package manager:

-

Install the dependency packages:

sh sudo dnf install gawk wget git diffstat unzip texinfo gcc gcc-c++ make \ chrpath socat cpio python3 python3-pexpect xz iputils python3-jinja2 \ mesa-libEGL SDL xterm python3-subunit rpcgen zstd lz4 perl-open.noarch \ perl-Thread-Queue numactl-devel cmake git curl graphviz gcc gcc-c++ \ tbb-devel gflags-devel boost-devel ninja-build -

Install packages required to create the flash card image and FPGA AI Suite runtime and dependencies:

cd /tmp mkdir uboot_tools && cd uboot_tools wget https://kojipkgs.fedoraproject.org/\ vol/fedora_koji_archive02/packages/uboot-tools/2018.03/3.fc28/x86_64/\ uboot-tools-2018.03-3.fc28.x86_64.rpm sudo dnf install ./uboot-tools-2018.03-3.fc28.x86_64.rpm sudo dnf install ninja-build fakeroot sudo python3 -m pip install pylint passlib scons -

Install CMake Version 3.16.3 or later:

-

Install Make Version 4.3 or later:

-

Add the /sbin directory to your

$PATHenvironment variable:

Ubuntu 20 Prerequisites¶

To install the prerequisites for Ubuntu 20:

-

Install the dependency packages:

sudo apt install gawk wget git diffstat unzip texinfo gcc build-essential \ chrpath socat cpio python3 python3-pip python3-pexpect xz-utils debianutils \ iputils-ping python3-git python3-jinja2 libegl1-mesa libsdl1.2-dev pylint3 \ xterm python3-subunit mesa-common-dev zstd liblz4-tool device-tree-compiler \ mtools libnuma-dev cmake git curl graphviz unzip lsb libtbb-dev libgflags-dev libboost-all-dev ninja-build -

Install packages required to create the flash card image and FPGA AI Suite runtime and dependencies:

-

Add the /sbin directory to your

$PATHenvironment variable:

Ubuntu 22 Prerequisites¶

To install the prerequisites for Ubuntu 22:

-

Install the dependency packages:

sh sudo apt install gawk wget git diffstat unzip texinfo gcc build-essential \ chrpath socat cpio python3 python3-pip python3-pexpect xz-utils debianutils \ iputils-ping python3-git python3-jinja2 libegl1-mesa libsdl1.2-dev xterm \ python3-subunit mesa-common-dev zstd liblz4-tool device-tree-compiler mtools \ libnuma-dev cmake git curl graphviz unzip lsb libtbb-dev libgflags-dev libboost-all-dev ninja-build -

Install packages required to create the flash card image and FPGA AI Suite runtime and dependencies:

-

Add the /sbin directory to your

$PATHenvironment variable:

Ubuntu 24 Prerequisites¶

To install the prerequisites for Ubuntu 24:

-

Install the dependency packages:

sh sudo apt install gawk wget git diffstat unzip texinfo gcc build-essential \ chrpath socat cpio python3 python3-pip python3-pexpect xz-utils debianutils \ iputils-ping python3-git python3-jinja2 libegl1-mesa libsdl1.2-dev xterm \ python3-subunit mesa-common-dev zstd liblz4-tool device-tree-compiler mtools \ libnuma-dev cmake git curl graphviz unzip libtbb-dev libgflags-dev libboost-all-dev ninja-build -

Install packages required to create the flash card image and FPGA AI Suite runtime and dependencies:

-

Add the /sbin directory to your

$PATHenvironment variable:

4.2.6 (Optional) Downloading the ImageNet Categories¶

By default, the S2M streaming app prints the category associated with each image after inference.

Optionally, you can use human-readable category names as follows:

- Download the list of ImageNet categories from a source such as the following URL: https://github.com/xmartlabs/caffeflow/blob/master/examples/imagenet/imagenet-classes.txt

- Place the contents into the following file:

4.2.7 Building the SD Card Image¶

The SD card image contains a Yocto Project embedded Linux system, HPS packages, and the FPGA AI Suite runtime.

Building the SD card image requires a minimum of 100GB of free disk space.

The SD card image is build with the create_hps_image.sh command, which does the following steps for you:

- Build a Yocto Project embedded Linux system.

- Build additional packages required by the SoC design example runtime, including the OpenVINO and OpenCV runtimes.

- Build the design example runtime.

- Combine all of these items and FPGA bitstreams into an SD card image using wic.

- Place the SD card image in the specified output directory.

For more details about the create_hps_image.sh command, refer to Building the Bootable SD Card Image (.wic).

To build the SD card image, run the following commands:

-

Agilex 5 FPGA E-Series 065B Modular Development Kit (MK-A5E065BB32AES1)

-

Agilex 7 FPGA I-Series Transceiver-SoC Development Kit

-

Arria 10 SX SoC FPGA Development Kit

If the command returns errors such as “bitbake: command not found”, try deleting the $COREDLA_WORK/runtime/build_Yocto/ directory before rerunning the create_hps_image.sh command.

4.3 Writing the SD Card Image (.wic) to an SD Card¶

Before running the demonstration, you must create a bootable SD card for the FPGA development kit. You can use either the precompiled SD card image or an SD card image that you created.

The precompiled SD card image (.wic) is in the following location:

- Agilex 5 FPGA E-Series 065B Modular Development Kit (MK-A5E065BB32AES1) $COREDLA_ROOT/demo/ed4/agx5_soc_s2m/sd-card/coredla-image-agilex5_mk_a5e065bb32aes1.wic

- Agilex 7 FPGA I-Series Transceiver-SoC Development Kit $COREDLA_ROOT/demo/ed4/agx7_soc_s2m/sd-card/coredla-imageagilex7_ dk_si_agi027fa.wic

- Arria 10 SX SoC FPGA Development Kit $COREDLA_ROOT/demo/ed4/a10_soc_s2m/sd-card/coredla-image-arria10.wic

If you built your own SD card image following the instructions in (Optional) Create an SD Card Image (.wic), then your SD card image is located in the directory that you specified for the -o option of the create_hps_image.sh command.

To write the SD card image to an SD card:

-

Determine the device associated with the SD card on the host by running the following command before and after inserting the SD card:

Typical locations for the SD card include

/dev/sdbor/dev/sdc. The rest of these instruction use/dev/sdxas the SD card location. -

Use the

ddcommand to write the SD card image as follows:

After the SD card image is written, insert the SD card into the development kit SD card slot.

If you want to use a Microsoft Windows system to write the SD card image to the SD card, refer to the GSRD manuals available at the following URL: https://www.rocketboards.org/foswiki/Documentation/GSRD.

4.4 Preparing SoC FPGA Development Kits for the FPGA AI Suite SoC Design Example¶

To prepare an FPGA development kit for the FPGA AI Suite SoC design example:

- Prepare one of the supported development kits: - Prepare the Agilex 5 FPGA E-Series 065B Modular Development Kit. - Prepare the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit. - Prepare the Arria 10 SX SoC FPGA Development Kit.

- Configure the SoC FPGA development kit UART connection.

- Determine the SoC FPGA development kit IP address.

4.4.1 Preparing the Agilex 5 FPGA E-Series 065B Modular Development Kit¶

Prepare the Agilex 5 FPGA E-Series 065B Modular Development Kit for the FPGA AI Suite SoC design example with the following steps:

- Confirming the Agilex 5 FPGA E-Series 065B Modular Development Kit Board Setup.

- Programming the FPGA device on the board in one of the following ways:

- Programming the Agilex 5 FPGA Device with the JTAG Indirect Configuration (

.jic) File. This method programs the QSPI flash memory, which then programs the FPGA device when the board is powered up. With this method, the FPGA programming can be persisted between board power cycles. This method is preferred for deployment or testing the other parts of your application after your FPGA bitstream is finalized. - Programming the Agilex 5 FPGA Device with the SRAM Object File (.sof). This method programs the FPGA device directly. The FPGA programming is not persisted between board power cycles. This method is typically faster than programming the QSPI flash memory with.jicfile that then programs the FPGA device. This method is preferred when developing or debugging your FPGA bitstream. - Connecting the Agilex 5 FPGA E-Series 065B Modular Development Kit to the Host Development System.

4.4.1.1 Confirming the Agilex 5 FPGA E-Series 065B Modular Development Kit Board Setup¶

Confirm the board settings as follows:

-

Ensure that the Agilex 5 FPGA E-Series 065B Modular Development Kit DIP switch and jumpers are set to their default settings. For this design example, you change the settings for some DIP switches depending on what are doing with the board: - For programming the FPGA device on the board, you will set the S4 DIP switch for JTAG mode. - For booting the FPGA device from flash memory, you will set the S4 DIP switch for QSPI mode. - To get power over the ATX connector, ensure that the SW2 switch is set to ATX power mode.

For more details about default DIP switch and jumper settings, refer to “Default Settings” in the Agilex 5 FPGA E-Series 065B Modular Development Kit User Guide.

-

Ensure that the SD card with the programmed Yocto image is installed on the board.

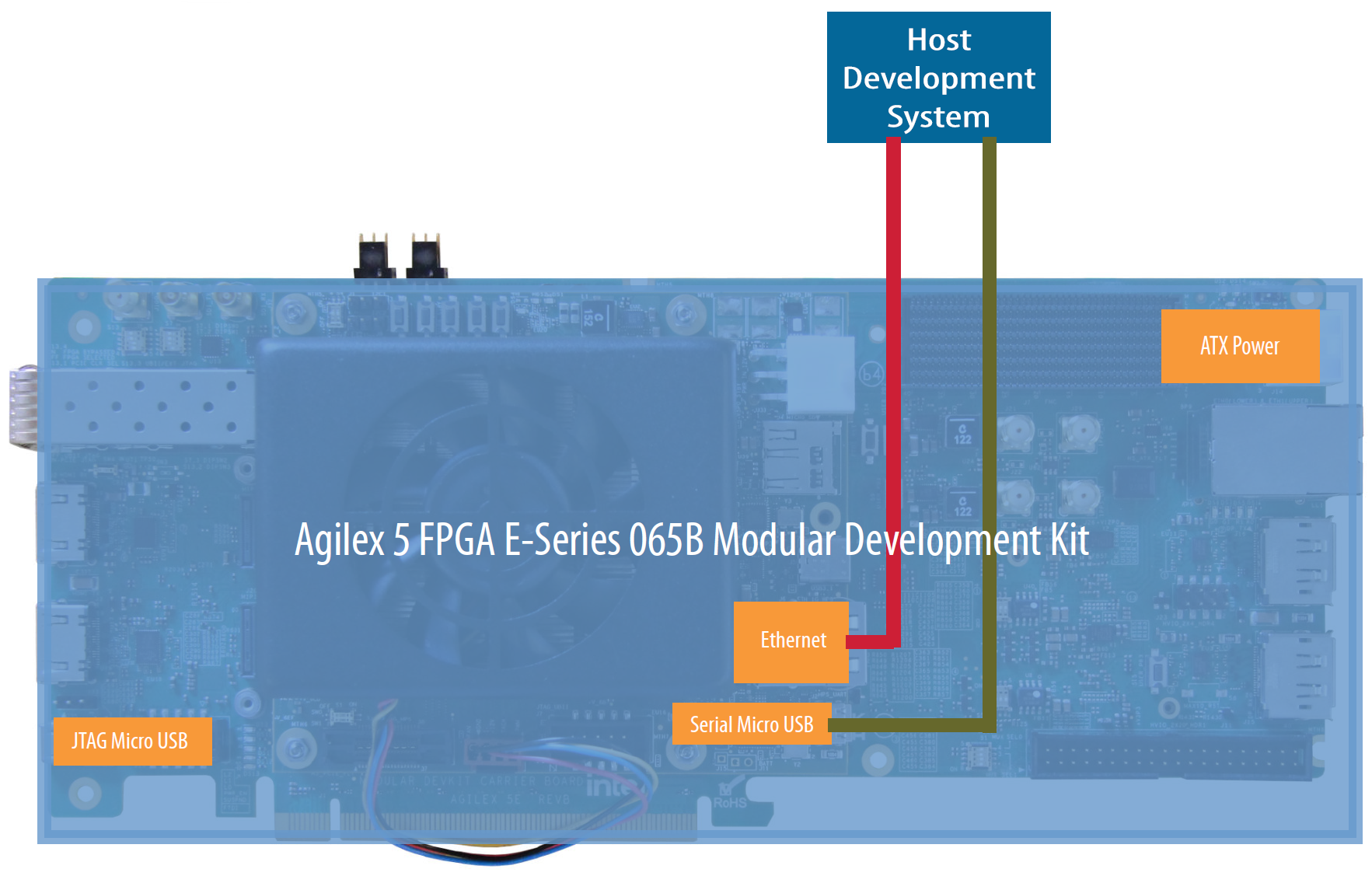

When configured and connected, the Agilex 5 FPGA E-Series 065B Modular Development Kit should resemble the following image:

The board connections serve the following purposes:

- The JTAG micro USB connector is used to program the FPGA device.

- The Ethernet connector is used for fast data transfer to the HPS.

- The serial micro USB connector is used as follows:

- To monitor the serial output from the HPS during operation.

- To provide command-line input to the HPS during operation.

4.4.1.2 Programming the Agilex 5 FPGA Device with the JTAG Indirect Configuration (.jic) File¶

Programming the Agilex 5 device with the JTAG indirect configuration (.jic) file programs the QSPI flash memory and allows the FPGA device to be automatically configured when power is applied to the board.

To program the Agilex 5 FPGA device with the JTAG indirect configuration (.jic) file:

-

Connect the Agilex 5 FPGA E-Series 065B Modular Development Kit to your host development system via JTAG micro USB connection as shown in the following diagram:

-

Switch the board into JTAG mode by setting the S4[1:2] DIP switch to OFF/OFF:

-

Program the QSPI with the

.jicfile by running the following commands on the host development system:cd $COREDLA_ROOT/demo/ed4/agx5_soc_s2m/sd-card/ quartus_pgm -m jtag -o "pvi;u-boot-spl-dtb.hex.jic@<device_number>"where <device_number> is 1 or 2, depending on whether the HPS is already running (that is, the prior state of the device). Use 1 if the HPS is not running, and 2 if the HPS is already running. If you do not know the state of the device, try 1. If that fails, try 2.

-

Switch the board into QSPI mode by setting the S4[1:2] DIP switch to ON/ON and cycle the power to the board.

At boot time, the Agilex 5 FPGA device is configured from the QSPI flash memory.

4.4.1.3 Programming the Agilex 5 FPGA Device with the SRAM Object File (.sof)¶

Programming the Agilex 5 device with the SRAM object file (.sof) programs FPGA device directly. The FPGA configuration is lost when power is removed from the board.

To program the Agilex 5 FPGA device with the SRAM object file (.sof):

-

Connect the Agilex 5 FPGA E-Series 065B Modular Development Kit to your host development system via JTAG micro USB connection as shown in the following diagram:

-

Switch the board into JTAG mode by setting the S4[1:2] DIP switch to OFF/OFF:

-

Program the FPGA device

.soffile by running the following commands on the host development system:

The Agilex 5 FPGA device now boots. The device will lose its configuration when you remove power from the board.

4.4.1.4 Connecting the Agilex 5 FPGA E-Series 065B Modular Development Kit to the Host Development System¶

Connect the Agilex 5 FPGA E-Series 065B Modular Development Kit to your host development system via Ethernet and serial micro USB UART connections as shown in the following diagram:

There are 4 COM ports on the one USB connection. The COM port connected to the HPS should be the 3rd available COM port.

4.4.2 Preparing the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit¶

Prepare the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit for the FPGA AI Suite SoC design example with the following steps:

- Confirming Agilex 7 FPGA I-Series Transceiver-SoC Development Kit Board Set Up.

- Programming the FPGA device on the board in one of the following ways:

- Programming the Agilex 7 FPGA Device with the JTAG Indirect Configuration (

.jic) File. This method programs the QSPI flash memory, which then programs the FPGA device when the board is powered up. With this method, the FPGA programming can be persisted between board power cycles. This method is preferred for deployment or testing the other parts of your application after your FPGA bitstream is finalized. - Programming the Agilex 7 FPGA Device with the SRAM Object File (.sof). This method programs the FPGA device directly. The FPGA programming is not persisted between board power cycles. This method is typically faster than programming the QSPI flash memory with.jicfile that then programs the FPGA device. This method is preferred when developing or debugging your FPGA bitstream. - Connecting the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit to the Host Development System.

4.4.2.1 Confirming Agilex 7 FPGA I-Series Transceiver-SoC Development Kit Board Set Up¶

Confirm the board settings as follows:

- Ensure that the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit DIP switch and jumpers are set to their default settings. For this design example, you change the settings for the S9 DIP switch depending on what are doing with the board: - For programming the FPGA device on the board, you will set the S9 DIP switch for JTAG mode. - For booting the FPGA device from flash memory, you will set the S9 DIP switch for QSPI mode.

For more details about default DIP switch and jumper settings, refer to “Default Settings” in the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit User Guide.

-

Ensure that the HPS IO48 OOBE daughter card is installed in connector J4 on the development kit, and the SD card with the programmed Yocto image is installed in the daughter card.

-

Ensure that the DDR4 x8 RDIMM is installed in the PCIe slot furthest from the fan. For RDIMM requirements, refer to Agilex 7 FPGA I-Series Transceiver-SoC Development Kit Hardware Requirements.

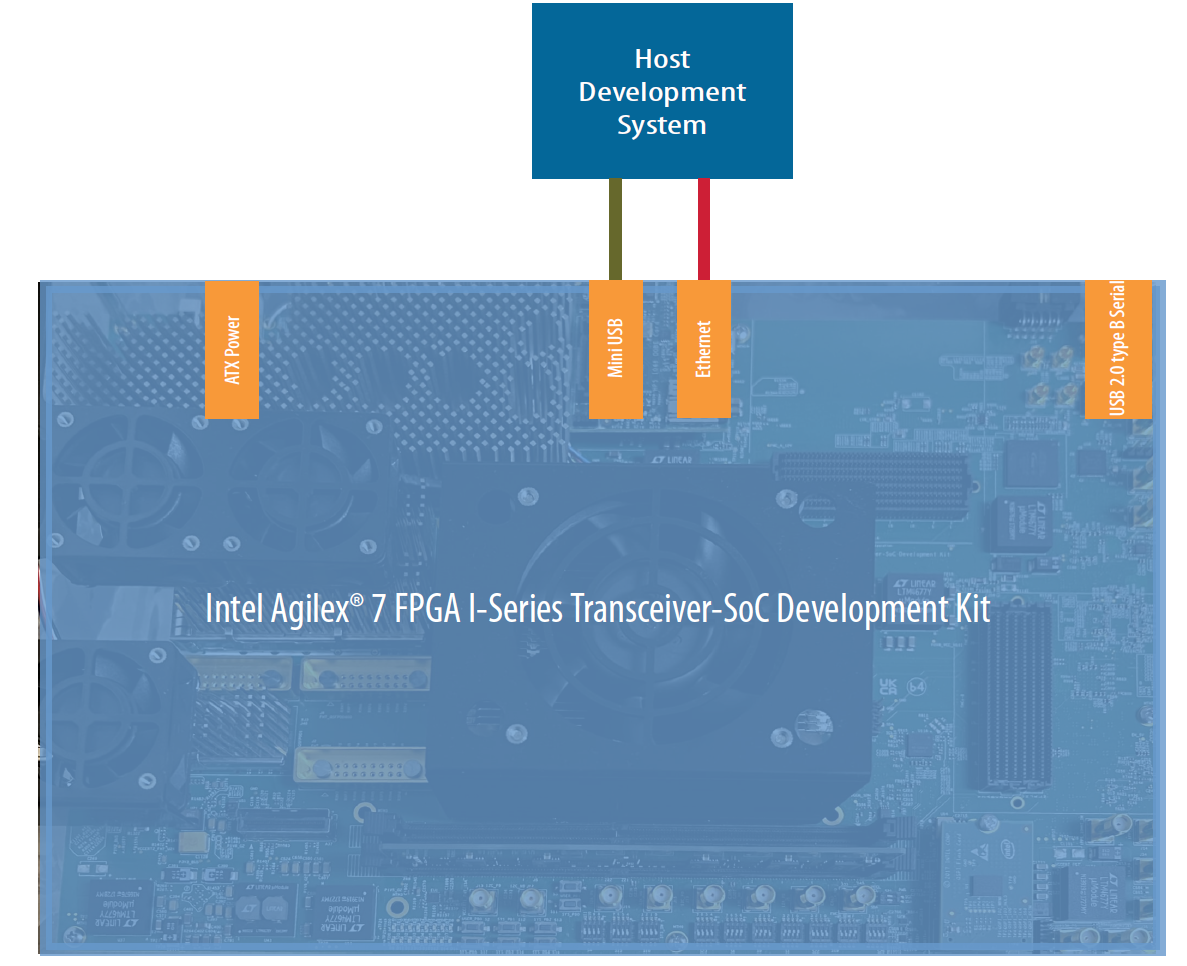

When configured and connected, the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit should resemble the following image:

The board connections serve the following purposes:

- The USB 2.0 connector is used to program the FPGA device.

- The Ethernet connector is used for fast data transfer to the HPS.

- The micro USB connector is used as follows: - To monitor the serial output from the HPS during operation. - To provide command-line input to the HPS during operation.

4.4.2.2 Programming the Agilex 7 FPGA Device with the JTAG Indirect Configuration (.jic) File¶

Programming the Agilex 7 device with the JTAG indirect configuration (.jic) file programs the QSPI flash memory and allows the FPGA device to be automatically configured when power is applied to the board.

To program the Agilex 7 FPGA device with the JTAG indirect configuration (.jic) file:

-

Connect the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit to your host development system via USB 2.0 connection as shown in the following diagram:

-

Switch the board into JTAG mode by setting the S9[1:4] DIP switch to ON/ON/ON/OFF:

-

Program the QSPI with the

.jicfile by running the following commands on the host development system:cd $COREDLA_ROOT/demo/ed4/agx7_soc_s2m/sd-card/ quartus_pgm -m jtag -o "pvi;u-boot-spl-dtb.hex.jic@<device_number>"where <device_number> is 1 or 2, depending on whether the HPS is already running (that is, the prior state of the device). Use 1 if the HPS is not running, and 2 if the HPS is already running. If you do not know the state of the device, try 1. If that fails, try 2.

-

Switch the board into QSPI mode by setting the S9[1:4] DIP switch to ON/OFF/OFF/OFF and cycle power to the board:

At boot time, the Agilex 7 FPGA device is configured from the QSPI flash memory.

4.4.2.3 Programming the Agilex 7 FPGA Device with the SRAM Object File (.sof)¶

Programming the Agilex 7 device with the SRAM object file (.sof) programs FPGA device directly. The FPGA configuration is lost when power is removed from the board.

To program the Agilex 7 FPGA device with the SRAM object file (.sof):

-

Connect the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit to your host development system via USB 2.0 connection as shown in the following diagram:

-

Switch the board into JTAG mode by setting the S9[1:4] DIP switch to ON/ON/ON/OFF:

-

Program the FPGA device

.soffile by running the following commands on the host development system:

The Agilex 7 FPGA device now boots. The device will lose its configuration when you remove power from the board.

4.4.2.4 Connecting the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit to the Host Development System¶

Connect the Agilex 7 FPGA I-Series Transceiver-SoC Development Kit to your host development system via Ethernet and USB UART connections as shown in the following diagram:

4.4.3 Preparing the Arria 10 SX SoC FPGA Development Kit¶

To prepare the Arria 10 SX SoC FPGA Development Kit for the FPGA AI Suite SoC design example:

- Confirming Arria 10 SX SoC FPGA Development Kit Board Settings.

- Connecting the Arria 10 SX SoC FPGA Development Kit to the Host Development System.

4.4.3.1 Confirming Arria 10 SX SoC FPGA Development Kit Board Settings¶

Confirm the board settings as follows:

-

Ensure that the Arria 10 SX SoC FPGA Development Kit has the required DIP switch and jumper settings. The SoC example design requires that all DIP switches have their default settings except for SW2 switches 5, 6, 7, and 8, which should be switched ON:

For more details about default DIP switch and jumper settings, refer to Arria 10 SoC Development Kit User Guide.

-

Ensure that the HILO cards are fitted correctly.

The Arria 10 SX SoC FPGA Development Kit includes two DDR4 HILO cards: the HPS memory (1GB) and the FPGA memory (2GB). Both the HPS Memory and FPGA Memory DDR4 HILO modules must be fitted as shown in the following image:

4.4.3.2 Connecting the Arria 10 SX SoC FPGA Development Kit to the Host Development System¶

Connect the Arria 10 SX SoC FPGA Development Kit to your host development system via Ethernet and USB UART connections as shown in the following diagram:

4.4.4 Configuring the SoC FPGA Development Kit UART Connection¶

The SoC FPGA development kit boards have USB-to-serial converters that allows the host computer to see the board as a virtual serial port:

- The Agilex 5 FPGA E-Series 065B Modular Development Kit has a FTDI USB-to-serial converter chip.

- The Agilex 7 FPGA I-Series Transceiver-SoC Development Kit has a USB-to-serial converter on the IO48 daughter card.

- The Arria 10 SX SoC FPGA Development Kit (DK-SOC-10AS066S) has a FTDI USB-to-serial converter chip.

Ubuntu, Red Hat Enterprise Linux, and other modern Linux distributions have built-in drivers for the FTDI USB-to-serial converter chip, so no driver installation is necessary on those platforms.

On Microsoft Windows, the Windows SoC EDS installer automatically installs the necessary drivers. For details, see the SoC GSRD for your SoC FPGA development kit at the following URL: https://www.rocketboards.org/foswiki/Documentation/GSRD

The serial communication parameters are as follows:

- Baud rate: 115200

- Parity: None

- Flow control: None

- Stop bits: 1

On Windows, you can use utilities such as TeraTerm or PuTTY to connect the board. You can configure these utilities from their tool menus.

On Linux, you can use the Minicom utility. Configure the Minicom utility as follows:

-

Determine the device name associated with the virtual serial port on your host development system. The virtual serial port is typically named

/dev/ttyUSB0.a. Before connecting the mini USB cable to the SoC FPGA development kit, determine which USB serial devices are installed with the following command:

b. Connect the mini USB cable from the SoC FPGA development kit to the host development system.

c. Confirm the new device connection with the ls command again:

-

If you do not have the Minicom application installed on the host development system, install it now.

- On Red Hat Enterprise Linux 8:

sudo yum install minicom - On Ubuntu: use

sudo apt-get install minicom

- On Red Hat Enterprise Linux 8:

-

Configure Minicom as follows:

a. Start Minicom:

b. Under Serial Port Setup choose the following:

- Serial Device: /dev/ttyUSB0 (Change this value to match the system value that you found earlier, if needed)

- Bps/Par/Bits: 115200 8N1

- Hardware Flow Control: No

- Software Flow Control: No

Press [ESC] to return to the main configuration menu.

c. Select Save Setup as dfl to save the default setup. Then select Exit.

4.4.5 Determining the SoC FPGA Development Kit IP Address¶

To determine the FPGA development kit IP address:

- Open a Terminal session to the FPGA development kit via the UART connection and log in using the user name root and no password.

Starting Record Runlevel Change in UTMP…

[ OK ] Finished Record Runlevel Change in UTMP.

9.553286] random: crng init done

[ OK ] Finished Load/Save Random Seed.

[ 10.084845] socfpga-dwmax ff800000.ethernet eth0: Link is Up – 1Gbps/Full – flow control off

[ 10.103287] IPv6: ADDRCONF(NETDEV_CHANGE): eth0: link becomes ready

Poky (Yocto Project Reference Distro) 4.0.2 arria10-62747948036a ttyS0

arria10-62747948036a login:

- Issue a hostname command to display the network name of the FPGA development kit board:

In this example, the network name of the board is arria10-62747948036a.

Tip: You need this hostname later on to open an SSH connection to the FPGA development kit.

- Confirm that you have a connection to the development kit from the development host with the ping command. Append the

.localto the host name when you issue thepingcommand:

build-host:$ ping arria10-62747948036a.local -c4

PING arria10-62747948036a (192.168.0.23) 56(84) bytes of data.

64 bytes of data from arria10-62747948036a (192.168.0.23): icmp_seq=1 ttl=63 time=1.66ms

64 bytes of data from arria10-62747948036a (192.168.0.23): icmp_seq=1 ttl=63 time=1.66ms

64 bytes of data from arria10-62747948036a (192.168.0.23): icmp_seq=1 ttl=63 time=1.66ms

64 bytes of data from arria10-62747948036a (192.168.0.23): icmp_seq=1 ttl=63 time=1.66ms

--- arria10-62747948036a ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3005ms

rtt min/avg/max/mdev = 1.664/2.037/2.283/0.238 ms

You can use the host name when you need to transfer files to the running system by appending the .local to the host name. For example, for the host name arria10-62747948036a, you can use arria10-62747948036a.local.

4.5 Adding Compiled Graphs (AOT files) to the SD Card¶

An AOT file contains instructions for the FPGA AI Suite IP to "execute" in order to perform inference. For Agilex 5 and Arria 10, the M2M design variant and the S2M design variant require different AOT files. The instructions in this section create both AOT files.

For Agilex 7, the M2M design variant and the S2M design variant use the same AOT file.

To add the compiled graphs to the development kit SD card:

Tip: If you completed the FPGA AI Suite Quick Start Tutorial in the FPGA AI Suite Getting Started Guide, you can skip steps 1-3.

- Create the

$COREDLA_WORKdirectory, if you have not already done so. - Prepare OpenVINO Model Zoo and Model Optimizer.

-

Tip: If you completed the FPGA AI Suite Quick Start Tutorial in the FPGA AI Suite Getting Started Guide, you have already completed these first three steps.

-

Confirm that you have the following directory:

If you do not have this directory, confirm that you have completed the first three steps.

-

Compile the graphs.

- Copy the compiled graphs to the SD card.

Related Information FPGA AI Suite Quick Start Tutorial

4.5.1 Preparing OpenVINO Model Zoo¶

These instructions assume that you have a copy of OpenVINO Model Zoo 2024.6 in your $COREDLA_WORK/demo/open_model_zoo/ directory.

To download a copy of Model Zoo, run the following commands:

cd $COREDLA_WORK/demo

git clone https://github.com/openvinotoolkit/open_model_zoo.git

cd open_model_zoo

git checkout 2024.6.0

4.5.2 Preparing a Model¶

A model must be converted from a framework (such as TensorFlow, Caffe, or Pytorch) into a pair of .bin and .xml files before the FPGA AI Suite compiler (dla_compiler command) can ingest the model.

The following commands download the ResNet-50 TensorFlow model and run the OpenVINO Open Model Zoo tools with the following commands:

source ~/build-openvino-dev/openvino_env/bin/activate

omz_downloader --name resnet-50-tf \

--output_dir $COREDLA_WORK/demo/models/

omz_converter --name resnet-50-tf \

--download_dir $COREDLA_WORK/demo/models/ \

--output_dir $COREDLA_WORK/demo/models/

The omz_downloader command downloads the trained model to $COREDLA_WORK/demo/models folder. The omz_converter command runs model optimizer that converts the trained model into intermediate representation .bin and .xml files in the $COREDLA_WORK/demo/models/public/resnet-50-tf/FP32/ directory.

The directory $COREDLA_WORK/demo/open_model_zoo/models/public/resnet-50-tf/ contains two useful files that do not appear in the $COREDLA_ROOT/demo/models/ directory tree:

- The README.md file describes background information about the model.

- The

model.ymlfile shows the detailed command-line information given to Model Optimizer (mo.py) when it converts the model to a pair of.binand.xmlfiles

For a list OpenVINO Model Zoo models that the FPGA AI Suite supports, refer to the FPGA AI Suite Handbook.

Troubleshooting OpenVINO Open Model Zoo Converter Errors

You might get the following error while running the omz_converter on a TensorFlow model:

ValueError: Invalid filepath extension for saving. Please add either a '.keras' extension for the native Keras format (recommended) or a '.h5' extension. Use 'model.export(filepath)' if you want to export a SavedModel for use with TFLite/TFServing/etc.

If you get this error, you can follow a process similar to the following example process that convert MobilenetV3 TensorFlow model to an OpenVINO model:

- Run the following Python code that converts MobileNetV3 to Tensorflow

.savedmodelformat:

import os

import tensorflow as tf

COREDLA_WORK = os.environ.get("COREDLA_WORK")

DOWNLOAD_DIR = f"{COREDLA_WORK}/demo/models/"

OUTPUT_DIR = f"{COREDLA_WORK}/demo/models/"

# Set the image data format

tf.keras.backend.set_image_data_format("channels_last")

# Load the MobileNetV3Large model with the specified weights

model = tf.keras.applications.MobileNetV3Large(

weights=str(

f"{DOWNLOAD_DIR}/public/mobilenet-v3-large-1.0-224-tf/weights_mobilenet_v3_large_224_1.0_float.h5"

)

)

# Save the model to the specified output directory

model.export(filepath=f"{OUTPUT_DIR}/mobilenet_v3_large_224_1.0_float.savedmodel")

- Run the following command to convert the TensorFlow

.savedmodelformat to OpenVINO model format:

mo \

--input_model=$COREDLA_WORK/demo/models/mobilenet_v3_large_224_1.0_float.savedmodel \

--model_name=mobilenet_v3_large_224_1.0_float \

--input_shape=[1, 224,224,3]

--layout nhwc

4.5.3 Compiling the Graphs¶

The precompiled SD card image (.wic) provided with the FPGA AI Suite uses one of the following files as the IP architecture configuration file:

- Agilex 5 FPGA E-Series 065B Modular Development Kit

AGX5_Performance.arch - Agilex 7 FPGA I-Series Transceiver-SoC Development Kit

AGX7_Performance_LayoutTransform.arch - Arria 10 SX SoC FPGA Development Kit

A10_Performance.arch

To create the AOT file for the M2M variant (which uses the dla_benchmark utility), run the following command:

cd $COREDLA_WORK/demo/models/public/resnet-50-tf/FP32

dla_compiler \

--march $COREDLA_ROOT/example_architectures/<IP arch config file> \

--network-file ./resnet-50-tf.xml \

--foutput-format=open_vino_hetero \

--o $COREDLA_WORK/demo/RN50_Performance_b1.bin \